CASE STUDY: Coded Bias

Official Synopsis: When MIT Media Lab researcher Joy Buolamwini discovers that many facial recognition technologies do not accurately detect darker-skinned faces or classify the faces of women, she delves into an investigation of widespread bias in algorithms. As it turns out, artificial intelligence is not neutral, and women are leading the charge to ensure our civil rights are protected. Coded Bias is a groundbreaking feature documentary that explores timely questions about technology and civil rights: What does it mean when artificial intelligence increasingly governs our liberties? And what are the consequences for the people artificial intelligence is biased against?

Type of project: Documentary Feature

Director: Shalini Kantayya

Writers: Shalini Kantayya (written by); Paul Rachman, Christopher Seward (story consultants)

Producers: Shalini Kantayya (producer); Michael Beddoes (UK Field Producer); Sabine Hoffman (co-producer)

Budget: Undisclosed

Financing: 7th Empire Media

Shooting Format: Digital

Screening Format: DCP

World Premiere: Sundance Film Festival (January 2020)

Distributor: The Alfred P. Sloan Foundation’s “Science on Screen” initiative (virtual cinemas); public television (broadcast premiere); Netflix (streaming)

Website: Official Site

DEVELOPMENT & FINANCING

“I’ve always been fascinated with disruptive technologies and whether they make the world more fair or less fair,” writer/director/producer Shalini Kantayya says in an interview for Film Independent. “With this film, I sort of stumbled down the rabbit hole.” She cites Cathy O’Neil’s 2016 book Weapons of Math Destruction as one of the initial sources of her obsession with artificial intelligence. Another was eventual Coded Bias subject Joy Buolamwini’s TED Talk “How I’m Fighting Bias in Algorithms,” also from 2016. Kantayya continued researching the issue, reading books by technology experts including Meredith Broussard, Safiya Noble and Virginia Eubanks.

“What I found was this dark underbelly with the technologies that we interface with every day,” Kantayya says, namely that the artificial intelligence tech already on shelves (and already in use by law enforcement) is suffused with racial and gender bias. “I didn’t realize until I made this movie that AI is already determining who gets hired, who gets healthcare, who gets undue police scrutiny,” she adds. “This is one of the biggest civil rights issues of our time.”

Says tech researcher and Coded Bias consultant/interviewee Meredith Broussard, from the same interview: “We have these ideas about AI that are fantasy based on Hollywood, but the reality of AI is quite different. We imagine that AI is Terminator and self-driving cars that are going to take us to work on demand. What we have about AI that’s real is that it’s just math, very complex and beautiful math, but just math. And [that math] is created by people—people with unconscious bias. And their biases are embedded in the technology they create.”

Kantayya planned a long-term, intermittent shoot, scheduled in typical nonfiction-film fashion as subjects became available and storylines developed. She produced the film through her own company, 7th Empire Media, whose previous films include Catching the Sun, Drop of Life and Campaigns.

PRODUCTION

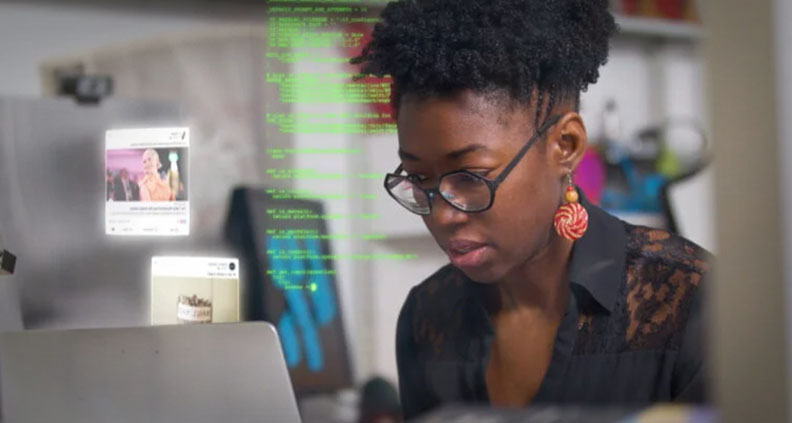

“One of the things I’m really proud of in the film is the science communication,” says Kantayya. “It’s [difficult] to take someone as brilliant as Meredith or one of the other seven PhDs in the film and try and distill their research into something that you can digest in small bites.” To engage general audiences with the complex subject matter, Coded Bias draws on Hollywood’s long (and rarely accurate) history of depicting AI, using film clips and a propulsive cinematic aesthetic. “I’m just a kid of sci-fi,” the filmmaker says. “Everything I knew about AI prior to making this film came from these sci-fi creators. So I feel like I pull from that visual language of what we imagine AI to be.”

Varying levels of prevalence, consumer awareness and regulation of facial recognition technology around the world led the New York-based Kantayya to head overseas for filming. “I tend to be someone who thinks about things from different global perspectives,” she says. “In this case, I was especially fascinated with these different approaches to data protection.” That interest led her to film vignettes in the UK, China and the US. She even describes the Chinese section of the film as “a Black Mirror episode inside of a documentary.”

For this section of the film, which spotlights a woman who has offloaded many of life’s basic functions to smart technology, the key, as with all nonfiction films, was earning the subject’s trust. “I think it helps that she [the subject] speaks in support of facial recognition and how convenient it is,” Kantayya says. The fact that this wasn’t happening in a “galaxy far, far away” but in the present day is, she notes, chilling. “I think in China, it would have been dangerous for someone to speak out against the facial recognition system,” she adds.

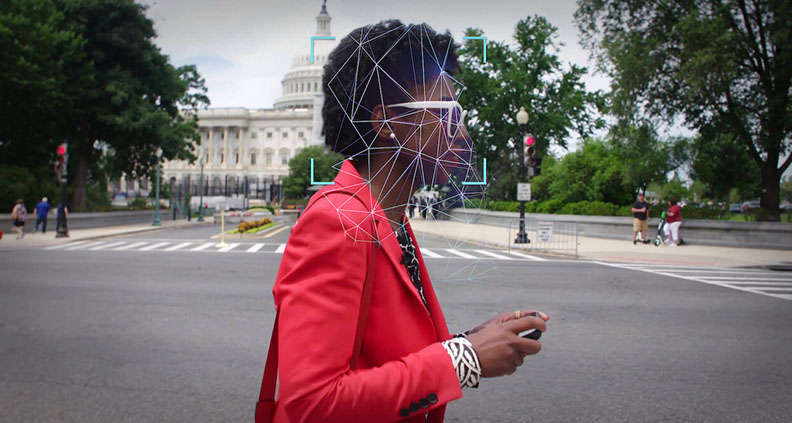

In an interview with Forbes, Kantayya describes one telling moment during shooting with primary subject Joy Buolamwini: “I had the experience of standing next to Joy and the computer could see my face, and the computer could not see her face. Even in the film, I don’t think it could capture how I felt in that moment, because it really felt like, ‘When the constitution was signed, Black people were three-fifths of a human being. And here we’re sitting at a computer who’s looking and doesn’t see Joy as a human being, doesn’t recognize her face as a face.’ To me, that was a stark connection of how racial bias can be replicated.”

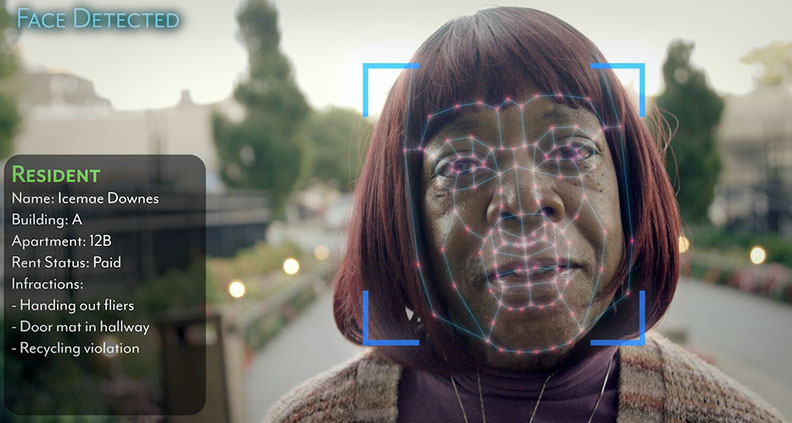

Speaking to Hot Docs about the use of digital effects to replicate the look and feel of surveillance cameras throughout the film, Kantayya says: “One of the challenges in making my film was how we make invisible systems visible to people. Trying to show the point of view and the voice of the AI became really important in the film. Through all this technology, through graphic effects, I’m trying to show the capabilities that already exist today.”

FESTIVAL STRATEGY

Coded Bias premiered at the 2020 Sundance Film Festival in the U.S. Documentary competition and was an official selection at SXSW, Hot Docs, CPH:DOX, San Francisco International Film Festival, AFI Docs, Full Frame, Mill Valley and others, including such social justice-oriented venues as the Human Rights Watch Film Festival in New York. Award wins include Best Director (Feature Documentary) at the Social Impact Media Festival and the Visionary Filmmaker Award at Boston’s GlobeDocs Film Festival, along with additional nominations and technical honors from a variety of film bodies worldwide.

On the strength of its festival run and subsequent release, Coded Bias also earned a nomination for Best Documentary at the 52nd NAACP Image Awards, alongside Attica, My Name is Pauli Murray, Summer of Soul, Tina and eventual winner Barbara Lee: Speaking Truth to Power.

THE SALE & RELEASE

Released during the height of the COVID-19 lockdown, Coded Bias’s “theatrical” release was part of the Sloan Foundation’s Science on Screen initiative, with screenings in 80+ virtual independent cinemas nationwide. The film was also regularly featured as part of “Best of Sundance” programming in select cinemas and was broadcast nationally on PBS’s Emmy Award-winning series Independent Lens. Women Make Movies is distributing it for educational use.

Industry giant Netflix subsequently acquired the film’s streaming rights and launched Coded Bias globally in April 2021. In collaboration with the student-run Institute for Digital Humanity at Minnesota’s North Central University, the filmmakers are currently designing a curriculum based on the film that they hope to provide as a free resource for students and educators.

From the filmmakers’ impact report: “A central aim of the campaign has been to further education around Artificial Intelligence. The appetite from teachers for in-class support has been demonstrated by frequent requests to screen Coded Bias in schools and universities… Coded Bias aims to educate and engage audiences with accurate information about how AI impacts civil liberties in economic opportunity, health care, criminal justice and misinformation.”

On lasting impact, the impact report states: “Coded Bias has developed a Declaration of Data Rights as Human Rights, to provide a framework to understand data rights as human beings. Over a hundred thought leaders, organizers and institutions have signed the pledge. The film is working with Amnesty International to develop a media strategy for the Declaration of Data Rights and Human Rights.”

The 2022 Sloan Film Summit is happening April 8-10 in Los Angeles. Stay tuned to sloanfilmsummit.org for videos, blog recaps and more. Follow our coverage on Film Independent’s Twitter, Facebook and Instagram.